Entries in science careers (86)

Using "I language": how to communicate with your team

Friday, September 30, 2022 at 7:57AM

- it is actually true. The problem that you are addressing isn't actually their action. The real problem is that this particular action hurts you. We all have different trigger points - messy bench, missed deadlines, swearing, having the radio on - each of these will cause one person to grind their teeth while another person literally won't notice. How do they know this is a sensitive issue for you without you telling them?

- it emphasizes the importance of the issue. For some people, deadlines just aren't that important, so it is hard to make them feel like deadlines are important to them. But they can understand that missing weekend after weekend with your child is important to you, and once you link the two they will get it

- it puts the other person in a zone of empathy rather than in a zone of defence. They don't feel attacked, they feel taken into your confidence, and are gaining an insight into what is important to you

- word spreads faster. People don't like to share that they got told off, but they will share that you are really sensitive to some particular things

- it works. Except for sociopaths, people don't like causing pain to other people. If you find that "you language" works better on someone than "I language", that is a giant red flag that that person has no empathy or ability to work as a team

science careers

Career milestone: 200 papers

Saturday, May 28, 2022 at 10:00AM

Our new paper out at Nature Immunology was my 200th scientific paper! A good time to look back on the portfolio.

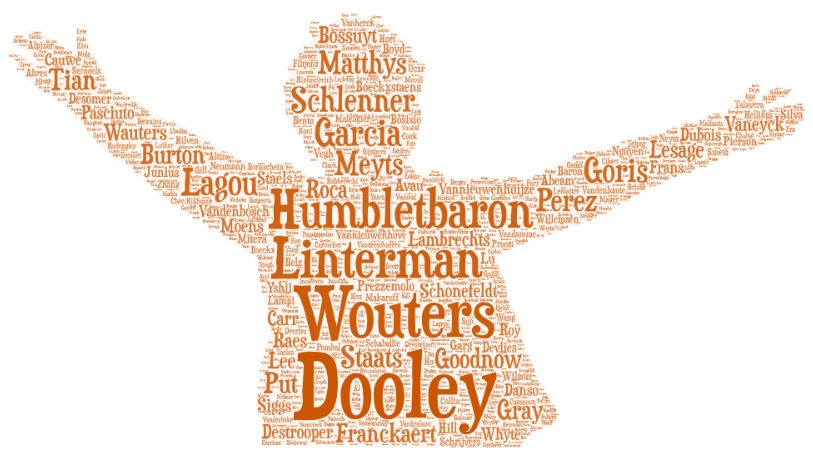

First, my coauthors:

My most frequent coauthor is James Dooley, no surprise since we've run the lab together these last 14 years! 67 articles coauthored, a good third of my papers. We've had a lot of staff and students trained in our lab over these years (165, to be exact), including a few who changed the direction of our lab - Stephanie Humblet-Baron coauthored 46 papers and Susan Schlenner coauthored 17, both are now professors at the University of Leuven. Vaso Lagou also coauthored 17 papers as a post-doc, before moving over to the Sanger. Jossy Garcia-Perez and Dean Franckaert were both PhD students, with 15 coauthorships, now working together at CellCarta. Our major collaborators come through clearly: Carine Wouters (28 papers) and Isabelle Meyts (15 papers) on the clinical side, An Goris (15 papers) on genetics, and Michelle Linterman (18 papers), Patrick Matthys (16 papers) and Sylvie Lesage (11 papers) for immunology.


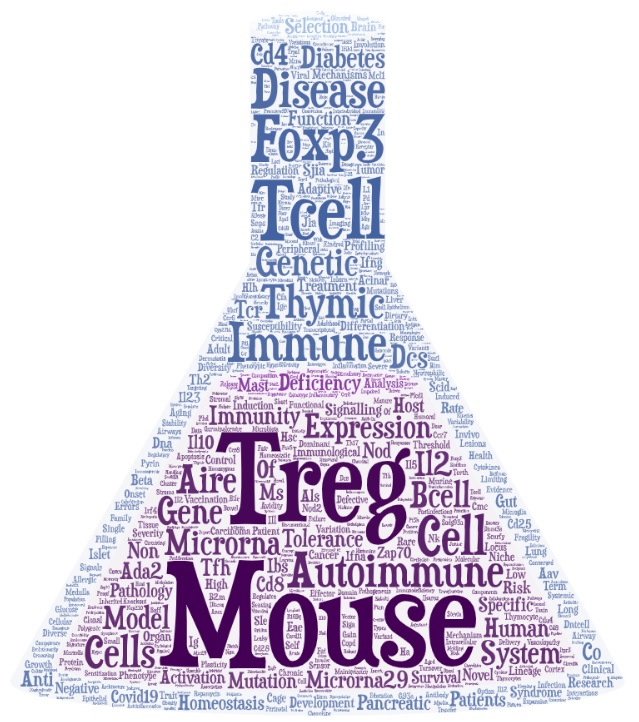

The research topics come out via the key words from the titles. Tregs, Foxp3, T cells and the thymus all leap out, but looking closely you'll see pretty much every branch of immunology represented. A special call-out to my favourite cytokine, IL2 (with 14 papers, and getting stronger) and our microRNA papers (22 papers, but it was just a phase).

The work is pretty evenly split between mouse and human, although we tend to use "mouse" a lot more in the title. In terms of topics, 88 papers work on autoimmune diseases, with 17 touching on diabetes. 40 papers intersect with cancer biology or cancer immunology, and 30 papers are on primary immunodeficiencies (across both mouse and human, but spread out over so many genes and syndromes they don't pop out here). 15 papers are on neuroimmunology, a current strength of the lab.

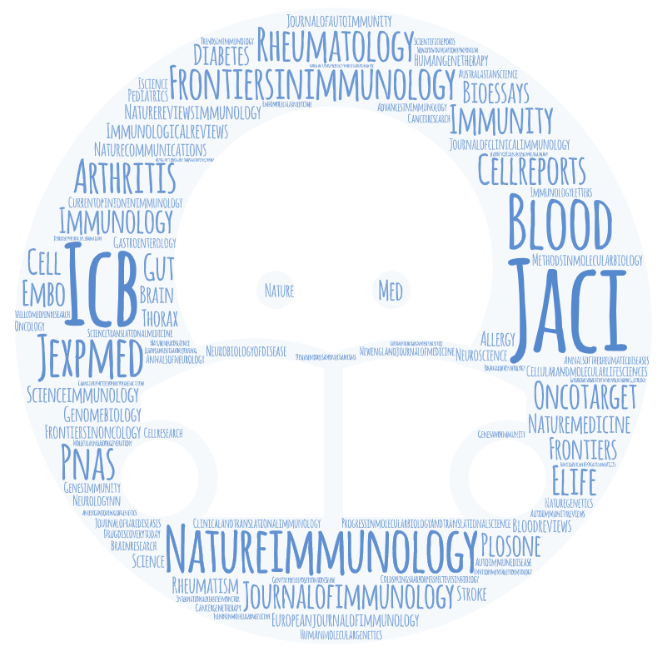

Finally, the journals that published our work! Really thrilled to see Nature Immunology up there, with 8 papers. We have a scattering of other top journals, Cell, Nature, Science, Nature Medicine, Nature Neuroscience, Nature Genetics all get a mention. Our most popular journals, however, are The Journal of Allergy and Clinical Immunology (10 papers), which has published some of our key work on primary immunodeficiency in both mouse and human, and Immunology and Cell Biology (10 papers), the Australian and New Zealand society journal (and a pleasure to work with!). I've been told by senior researchers that publishing anything below the top tier "dilutes my record", but I'm proud of all the science we do, and work to make sure that every story finds a home and every staff member or student with data gets to show it on the international stage.


Liston lab, science careers

In praise of metrics during tenure review

Wednesday, June 30, 2021 at 1:34PM

Metrics, especially impact factor, have fallen badly out of favour as a mechanism for tenure review. There are good reasons for this - metrics have flaws, and journal impact factors clearly have flaws. It is important, however, to weigh up the pros and cons of the alternative systems that are being put in place, as they also have serious flaws.

To put my personal experience on the table, I've always been in institutes with 5 yearly rolling tenure. I've experienced two tenure reviews based on metrics, and two based on soft measures. I've also been a part of committees designing these systems, for several institutes. I've seen colleagues hurt by metric-orientated systems, and colleagues hurt by soft measurement systems. There is no perfect system, but I think that people seriously underestimate the potential harm of soft measurement systems.

Example of a metric-based system

When I first joined the VIB, they had a simple metric-based system. Over the course of 5 years, I was expected to publish 5 articles in journals with an impact factor over 10. I went into the system thinking that these objectives were close to unachievable, although the goals came along with serious support that made it all highly achievable.

For me, the single biggest advantage of the metric-based system was its transparency. It was not the system I would have designed, but I knew the goals, and more importantly I could tell when I had reached those goals. 3 years into my 5-year term I knew that I had met the objectives and that the 5-yearly review would be fine. That gave me and my team a lot of peace of mind. We didn't need to stress about an unknowable outcome.

Example of a soft measurement system

The VIB later shifted to a system that is becoming more common, where output is assessed for scientific quality by the review panel, rather than by metrics. The Babraham Institute, where I am now, uses a similar system. Different institutes have different expectations and assessment processes, but in effect these soft measurement systems all come down to a small review panel making a verdict on the quality of your science, with the instruction not to use metrics.

This style of assessment creates an unknown. You really don't know for sure how the panel will judge your science until the day their verdict comes out. Certainly, they have the potential to save group leaders that would be hurt by metric-based systems, but equally they can fail group leaders who were productive but were judged more harshly through the biases of the panel than through the peer review they received from manuscript reviewers.

This in fact brings me to my central thesis: with either metrics or soft measurement systems, you end up having a small number of people read your papers and make their own judgement on the quality of the science. So let's compare how the two work in practice:

Metrics vs soft measurements

Under the metric-based system, essentially my tenure reviewers were the journal editors and external reviewers. For my metrics, I had to hit journals with impact factors above 10, which gives me around 10 journals to aim at in my field. I had 62 articles during my first 5 years, and let's say that the average article went to two journals, each with an editor and 3 reviewers. That gives me a pool of around 500 expert assessments of my work, each judging whether it is of the quality and importance worthy of hitting a major journal. There is almost certainly going to be overlap in that pool, and I published a lot more than many starting PIs, but it is not unreasonable to think that 100 different experts weighed in. Were all of those reviews quality? No, of course not. But I can say that I had the option to exclude particular reviewers, the reviewers could not have open conflicts of interest, the journal editor acted as an assessor of the review quality, and I had the opportunity to rebut claims with data. Each individual manuscript review is a reviewer roulette, a flawed process, but in aggregate it does create a body of work reviewed by experts in the field.
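The back-of-envelope estimate above can be written out as a short calculation. This is only a sketch of the arithmetic in the paragraph: the function and constant names are made up for illustration, and the inputs are the rough figures quoted in the text rather than measured data.

```python
# Values taken from the paragraph above; the per-submission structure is the
# author's stated approximation, not measured data.
ARTICLES = 62                  # articles in the first 5 years
JOURNALS_PER_ARTICLE = 2       # assumed average number of journals per article
EDITORS_PER_SUBMISSION = 1
REVIEWERS_PER_SUBMISSION = 3


def review_events(articles, journals_per_article, editors, reviewers):
    """Count individual expert assessments (editors plus reviewers) across all submissions."""
    return articles * journals_per_article * (editors + reviewers)


total = review_events(
    ARTICLES, JOURNALS_PER_ARTICLE, EDITORS_PER_SUBMISSION, REVIEWERS_PER_SUBMISSION
)
print(total)  # 496, i.e. "around 500"
```

The step from roughly 500 assessments down to about 100 distinct experts is a judgement about overlap, not something this calculation derives.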

Consider now the soft measurement system. In my experience institutes reviewed all PIs at the same time. Some institutes do this with an external jury, with perhaps 10 individuals but maybe only 1-3 are actually experts on your topic. Other institutes do this with an internal jury, perhaps 3-5 individuals in the most senior posts. In each case, you have an extremely narrow range of experts, reviewing very large numbers of papers in a very short amount of time. In my latest review I had 79 articles over the prior 5 years. I doubt anyone actually read them all (I wouldn't expect them to). More realistically, I expect they read most of the titles, some of the abstracts, and perhaps 1-2 articles briefly. Instead, what would have heavily influenced the result is the general opinion of my scientific quality, which is going to be very dependent on the individuals involved. While both systems have treated me well, I have seen very productive scientists fall afoul of this system, simply because of major personality clashes with their head of department (who typically either selects the external board, or chairs the internal jury). Indeed, I have seen PIs leave the institute rather than be reviewed under this system, and (in my experience) the system has been a heavier burden on women and immigrants.

Better metrics

As part of the University of Leuven Department of Microbiology and Immunology board, I helped to fashion a new system which was built as a composite of metrics. The idea was to keep the transparency and objectivity of metrics, but to use them in a responsible manner and to ameliorate flaws. The system essentially used a weighted points score, building on different metrics. For publications in the prior 5 years, journal impact factor was used. For publications >5 years old, this was replaced by actual citations of your article. Points were given for teaching, Masters and PhD graduations, and various services to the institute. Again, each individual metric includes inherent flaws, and the basket of metrics used could have been improved, but the ethos behind the system was that by using a portfolio of weighted metrics you even out some of the flaws and create a transparent system.
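To make the idea of a weighted points score concrete, here is a minimal sketch in Python. It is not the actual Leuven formula: every weight, field name and category below is a hypothetical placeholder. The only parts taken from the description are the structure (impact factor for publications from the prior 5 years, citations for older ones) and the extra points for teaching, graduations and service.

```python
from dataclasses import dataclass


@dataclass
class Publication:
    age_years: float
    journal_impact_factor: float
    citations: int


# Hypothetical weights, chosen only for illustration.
WEIGHTS = {
    "recent_pub": 1.0,   # points per unit of impact factor (papers <= 5 years old)
    "older_pub": 0.25,   # points per citation (papers > 5 years old)
    "course": 0.5,       # points per course taught
    "masters": 2.0,      # points per Masters graduation
    "phd": 5.0,          # points per PhD graduation
    "service": 1.0,      # points per service role
}


def publication_points(pub, weights=WEIGHTS):
    """Recent papers score on journal impact factor, older papers on their own citations."""
    if pub.age_years <= 5:
        return weights["recent_pub"] * pub.journal_impact_factor
    return weights["older_pub"] * pub.citations


def composite_score(pubs, courses, masters, phds, service_roles, weights=WEIGHTS):
    """Sum the weighted contributions from every metric in the basket."""
    return (
        sum(publication_points(p, weights) for p in pubs)
        + weights["course"] * courses
        + weights["masters"] * masters
        + weights["phd"] * phds
        + weights["service"] * service_roles
    )


example_pubs = [
    Publication(age_years=2, journal_impact_factor=10.0, citations=3),
    Publication(age_years=8, journal_impact_factor=4.0, citations=120),
]
score = composite_score(example_pubs, courses=4, masters=3, phds=2, service_roles=1)
print(score)
```

Because the weights are published in advance, a PI can recompute their own score at any time, which is the transparency argument made above.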

The path forward

I hope it is clear that I recognise the flaws present in metrics, but also that I consider metrics to confer transparency and to be a valuable safeguard against the inevitable political clashes that can drive decisions by small juries. In particular, metrics can safe-guard junior investigators against the conflicts of interest that can dominate when a small internal jury has the power to judge the value of output. Just because metrics are flawed doesn't mean the alternatives are necessarily better.

In my ideal world (in the unlikely scenario that I ever become an institute director!), I would implement a two-stage review system, using 7-year cycles. The first stage would be metric-based, using a portfolio of different metrics. These metrics would be in line with institute values, to drive the type of behaviour and outputs that are desired. The metrics would include provisions for parental or sick leave, built into the system. They would be discussed with PIs at the very start of the review period, and fixed. Everything would be above board, transparent, and realistic for PIs to achieve. Administration would track the metrics, eliminating the excess burden of constant reviewing on scientists.

For PIs who didn't meet the metric-based criteria a second system would kick in. This second system would be entirely metric-free, and would instead focus on the re-evaluation of their contributions. By limiting this second evaluation to the edge cases, substantial resources could be invested to ensure that the re-evaluation was performed in as unbiased a manner as possible, with suitable safeguards. I would have a panel of 6 experts (paid for their time), 3 selected from a list proposed by the PI and 3 selected from a list proposed by the department head. Two internal senior staff would also sit on the panel, one selected by the PI and one selected by the department head. The panel would be given example portfolios of PIs that met the criteria of tenure-review, to bench-mark against. The PI would present their work and defend it. The panel would write a draft report and send it to the PI. The PI would then have the opportunity to rebut any points on the report, either in writing or as an oral defence, by the choice of the PI. The jury would then make a decision on whether the quality of the work met the institute objectives.
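The control flow of this two-stage proposal can be sketched in a few lines of Python. The function name, the single numeric threshold and the callable standing in for the panel are all assumptions made for illustration; the proposal itself does not specify how the metric criteria would be expressed.

```python
from typing import Callable, Tuple


def two_stage_review(
    metric_score: float,
    metric_threshold: float,
    panel_review: Callable[[], bool],
) -> Tuple[str, bool]:
    """Return (stage that decided the outcome, whether the PI passed)."""
    # Stage 1: transparent metrics agreed at the start of the review period.
    if metric_score >= metric_threshold:
        return ("metrics", True)
    # Stage 2: only edge cases reach the resource-intensive, metric-free panel.
    return ("panel", panel_review())


# A PI who meets the metric criteria never goes before the panel.
print(two_stage_review(12.0, 10.0, panel_review=lambda: False))

# A PI below the threshold gets the detailed panel evaluation instead.
print(two_stage_review(7.0, 10.0, panel_review=lambda: True))
```

Passing the panel in as a callable means it is only invoked for PIs who miss the metric criteria, mirroring the point that the expensive evaluation is reserved for edge cases.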

I would argue that this compound system brings in the best of both worlds. For most PIs, the metric-based system will bring transparency and will reduce both stress and paperwork. Those PIs whose value is not adequately demonstrated by metrics get the detailed attention that is only possible when you commit serious resources to a review. Yes, it takes a lot of extra effort from the PI, the jury and the institute, which is why I don't propose it to run for everyone.

TLDR: it is all very well and good to celebrate when an institute says it is going to drop impact factors in their tenure assessment, but the reality is that the new systems put in place are often more political and subjective than the old system. Thoughtful use of a balanced portfolio of metrics can actually improve the quality of tenure review while reducing the stress and administrative burden on PIs.

Adrian Liston


Coda: obviously I have my own opinion on what makes a good reviewing system. However when I am on a review panel, even when chairing a review panel, I explicitly follow the institution rules laid out for me. If given the chance I will try to convince them that rule changes will give better outcomes, but if the system says to ignore X, Y or Z, then I ignore X, Y or Z.


With regards to the Declaration on Research Assessment (DORA): while DORA has its heart in the right place, I believe they are wrong to suggest blanket bans on particular metrics. The striking thing about DORA is that while they accurately point out the flaws of particular metrics, they don't propose actual working alternatives. In practice, this results in an institution that has removed valuable tools from their tool-kit, without clear guidance on how to replace them. Specifically, some of the metrics preferred by DORA are less suitable for the job. For example, DORA prefers H-factor over IF, but H-factor is essentially correlated to seniority and is incredibly ill-suited to measuring a tenure-track PI who may have published their key papers less than one year ago. They also suggest "assessing the scientific content", without any guidance on how to do so, or on the potential pitfalls that institutions fall into when trying to do this. In short, DORA opened up an important debate, but is more proscriptive than helpful.


A note on perceived value and economic theory:

Part of my thesis here is that the high perceived value of impact factor ends up driving actual value, regardless of the fragility of the original perception. Consider metrics to be like currency - the value of the currency lies in the common belief that it has value. The more widely shared that belief is, the more stable the currency is, which is why Bitcoin fluctuates wildly compared to the Euro.

How does this analogy work when applied to impact factor? Think of a new journal starting up, and imagine they accidentally got given a really good impact factor, say 8.2. Just a glitch in the system, no real value. Authors looking for a high impact factor journal see this new journal, and submit quality work. Reviewers asked to review for a new journal check the impact factor and think it is legit. They mentally benchmark the submitted work against other 5-10 impact factor journals. A year later, that accidental impact factor has solidified into a genuine impact factor of 5-10. In other words, the more people who think that journal impact factor is a measure of quality, the better a measure of quality it becomes - regardless of how flawed the original calculation was.

Does my example seem overly speculative? It isn't. I've been on the board of new journals. They are desperate to get as high a starting impact factor as possible, precisely because it creates that long-lasting mental benchmark. The fact that journals try to game impact factors and advertise increases in impact factors shows that these mental benchmarks exist widely in the community and have real impacts on the quality of submissions and reviews.

In short, any metric that is widely perceived as being correlated with quality will start to become correlated with quality, just through economic theory. Regardless of how stupid that metric was to begin with.


Career trajectory

Monday, June 21, 2021 at 1:31PM

Today I gave a talk on my career trajectory for the University of Turku, in Finland. Looking back on the things I did right and wrong at different stages of my career, and a little advice for the next generation of early career researchers:


My Life in Science

Monday, June 21, 2021 at 1:03PM

An old talk I gave on my scientific career, with an emphasis on being a parent scientist and on my experience in seeing sexism in action in the academic career pathway:

Postdoc job opportunity in the lab

Tuesday, April 13, 2021 at 2:43PM

Happy to say we have a great job opportunity to join our lab! The position is for a bioinformatics or data science postdoc, starting in the Babraham Institute. The position is to lead the data analytics of the Eximious Horizon2020 project. An amazing opportunity to unravel the real-world link between environment and immunity, using the largest and most comprehensive datasets yet to be generated. I welcome applications from thoughtful scientists willing to learn the biology and search for the most appropriate computational tools to apply. Time is provided to learn and develop new skills, so consider applying even if you don't perfectly align to the project. Come join us in Cambridge!

Liston lab, science careers

A cynic's guide to getting a faculty position

Friday, March 12, 2021 at 10:19AM

I gave an academic career talk yesterday at the University of Alberta, and on request from the students I am putting the talk online. These are my personal thoughts on how the job selection process works for independent research positions in universities or research institutes, based largely on my experience, the experience of my trainees going through the process and my observations of behind-the-scenes job committee meetings. I am sure that there is enormous variation in experiences, and that systems work differently in different places: hearing the perspective of many people is more valuable than just hearing the perspective of one.

I'd also just note that this is not an endorsement of the system as it exists. There are aspects of the system that I dislike and actively work to change. But I still think it is valuable for job seekers to understand the system, warts and all, rather than believing in an aspirational system that has yet to materialise. I often hear from trainees that their career training is largely directed to non-academic careers, and they rarely hear how the academic pathway works. So, with a little too much honesty, and an expectation of landing in hot water, here is my attempt to open a conversation:


Thesis acknowledgements

Wednesday, March 3, 2021 at 10:29AM

It is so lovely to read the words of graduating students in their thesis acknowledgements. I've seen them learn and grow over the years, increase in skill and resiliency, reach depths they didn't know they had. And here they are, just leaving on their new adventure, and they stop to write kind words back to us.

These from (soon to be) Dr Steffie Junius:

Next, I would like to thank my co-promotor Prof. Adrian Liston. While on paper you’re addressed as my ‘co-promotor’, I truly perceived this as rather having two full promotors who both guided me in their own way, complementing each other. I still remember the evening in Boston when I received the email with an offer to start a PhD at your lab. The thrill to be accepted in such an environment of excellent science made me excited to become the best possible immunologist I could be. Throughout this PhD you have guided me with your advice and mentorship. Especially on the dark moments, you always were able to push me in the right direction and to follow through even when I did not know how. As PhD students, we always think the science is the most important part of a PhD, but you made me understand that personal development is just, if not more, important to becoming truly successful. Thank you for your advice and guidance over the years. The lessons you taught me will stay forever with me throughout my career.

Thank you Steffie, it has been wonderful to be part of your journey. Enjoy the next stage of your career!
