A looming threat to scientific publication

Friday, February 16, 2024 at 10:20PM

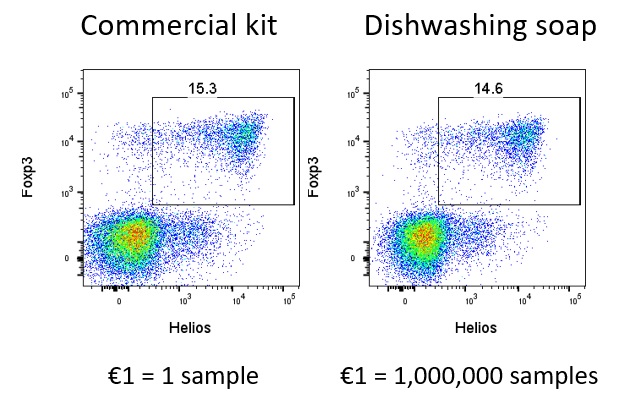

You can't argue with Professor John Tregoning, of Imperial College: these graphics are "objectively funny".

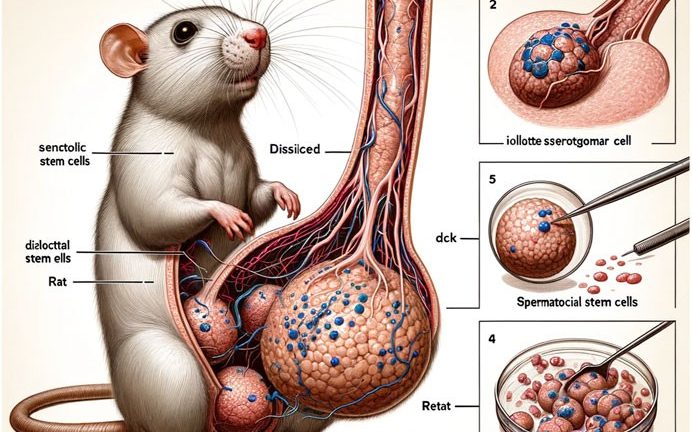

But beyond the snickering, there is a reason why the biomedical science community is in uproar over this paper.

It is a failure of peer review that this article was ever published in a scientific journal. Scientific articles are meant to be peer reviewed, precisely to catch garbage articles like this. No system is ever 100% perfect, and science is a rapidly-moving, self-correcting ecosystem, but this is just so... prominent... a mistake. How did it happen?

To understand, it is important to recognise that scientists have been aware of the shortcomings of peer review for years (and there are many!). The scientific publishing system is flawed: it is hard to find anyone who would argue against that. Unfortunately, many of the "solutions" have made the problem worse.

"Open access publishing" opened up science to the world. Rather than "pay to read", scientists "pay to publish". On the plus side, the public can access scientific articles cost-free. On the downside, it created a market for pseudo-scientific "predatory journals" to open up and accept any "scientific" article that someone is willing to pay to publish. One of the leading journals in the "open access" movement was "Frontiers". They genuinely transformed the style of peer review, making it rapid, interactive and very, very scalable. Unfortunately, the utopian vision of the journal clashed with the perverse economic incentives of an infinitely scalable journal that makes thousands of dollars for every article it accepts. I was an early editor at the journal, and soon clashed with the publishing staff, who made it next-to-impossible to reject junk articles. I resigned from the journal 10 years ago, because the path they were taking was a journey to publishing nonsense for cash.

Fast-forward 10 years, and Frontiers publishes more articles than all society journals put together. Frontiers in Immunology publishes ~10,000 articles a year; as a reference, reputable society journals such as Immunology & Cell Biology publish ~100 papers a year. Considering Frontiers earns ~$3000 per article, it is a massive profit-making machine. The vision of transforming science publishing is gone, replaced with growth at all costs. Add to this a huge incentive for scientists to publish papers, even ones that no one reads, and the result is a perverse economy, with "paper mills" being paid to write fake papers and predatory journals being paid to publish them, all to fill up a CV.

Generative AI turbo-charges this mess. With some basic competency at using generative AI, scamming scientists can rapidly fake a paper. This is where #ratdckgate comes in. The paper is obviously faked, text and figures. Yet it got published. There were a lot of failures in the system here, in particular perverse incentives to cheat, the generation of an efficient marketplace for cheating, and a journal that overrode the peer reviewers because it wanted the publication fees.

As the Telegraph reports, this is "a cock up on a massive scale".

No one really cares about this article, one way or another. Frontiers has withdrawn the article, and even congratulated itself on its rapid action for the one fake paper that went viral, without dealing with the ecosystem it has created. The reason why the scientific community cares is that this paper is just the tip of the iceberg. The scientific publishing system was designed to catch good-faith mistakes. It wasn't designed to catch fraud, and isn't really suitable for that purpose. Yes, reviewers and editors look out for fraud, but as generative AI advances it will be harder and harder to catch it, even at decent journals. It is an arms race that we can't win, and many in the scientific publishing world are struggling to see a solution.

There are many lessons to be learned here:

- The scientific career pathway provides perverse incentives to cheat. That is human nature, but we need to change research culture to minimise it

- Even good intentions can create toxic outcomes, such as open access creating the pay-to-publish marketplace. We need to redesign scientific publishing fully aware of the way it may be gamed, and pre-empt toxic outcomes

- Peer review isn't perfect, and isn't even particularly good at catching deliberate fraud. We probably need to separate peer review from fraud detection, and take a separate approach to each

- Scientific journals vary radically in the quality of peer review. We need a rigorous accreditation system to provide the stick to punish journals that harm science

- Generative AI has huge potential for harm, and we need to actively design systems to mitigate those harms

Improving scientific publishing is a challenge for all of us. In a world where science is undermined by politics, we cannot afford to provide the ammunition to vaccine deniers, climate change deniers, science skeptics and others who want to discredit science for their own agenda. So we need to get our house in order.

scientific method
